By Chris Ullrich, CTO at Immersion

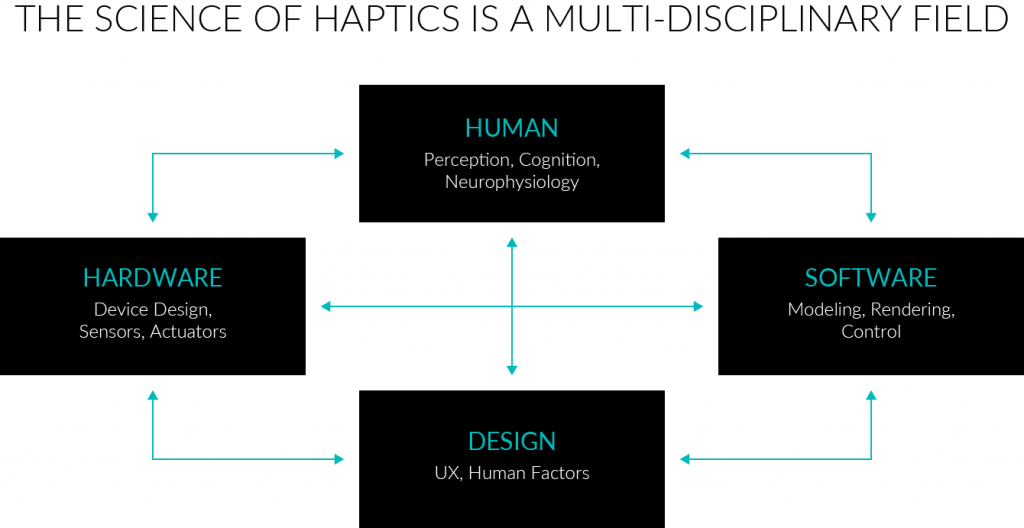

New use cases for haptic technology in markets including automotive, gaming, IoT, PC, and virtual reality have led to more interest in developing haptic experiences and products with haptic feedback. But developing a product with haptics is not as simple as it seems. Implementing haptic technology is a multi-layered effort that requires the full system solution to work together harmoniously to produce the best experience.

The performance of the individual layers (Design, Software, and Hardware) is intertwined, and each layer is highly dependent on the others. This is what we call the haptic technology stack.

The haptic stack framework, developed by Immersion, helps planners and product leads think about the elements and the types of skills and vendors needed to execute a successful product that includes haptics. A good understanding of the haptic technology stack is essential. This article provides an overview of the technology layers in the haptic stack to enable high-quality user experiences across all markets.

This is the first in a series of technical articles that will dig into haptic technologies, their creation, and their correct usage.

Haptics is a system-level feature that can impact the higher-level UI design. Because of this, it can be surprisingly complex to integrate all the different levels correctly. Knowing which parts of the haptic stack are better to build and which are better to buy can be the difference between a product that offers best-in-class haptics and one that fails to be competitive. It is critical that a system-level product development structure is used. At Immersion, our product teams include software, hardware, and UX practitioners who have a deep understanding of the physiology of human sensation. These elements are all needed to deliver on a promise of high-quality, satisfying, and useful haptics.

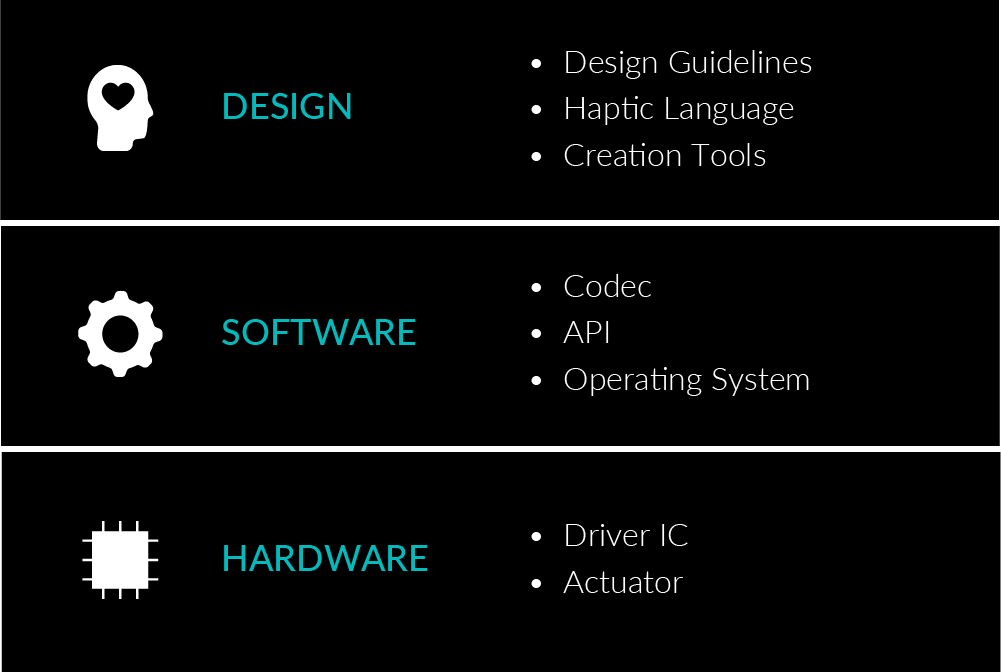

As noted earlier, the haptic technology stack is divided into three elements: Design, Software, and Hardware, as shown in the figure above.

Hardware

Starting at the bottom of the haptic stack, the hardware layer consists of at least two elements – an actuator and a driver or amplifier. The actuator is the part of the system that converts electrical signals into acceleration or force feedback. There are many types of actuators (I’ll present a framework for thinking about actuators in a future post); the actuator is one of the most important (and expensive) parts of a high-quality haptic system. Along with the actuator, it is necessary to have a driver IC or amplifier that can convert haptic effects into drive voltage. Drivers and actuators need to be chosen together to ensure the best possible haptic experience.

Broadly speaking, actuators are divided into vibrotactile (vibrating) and kinesthetic (force feedback). Most commercial embodiments use vibrotactile feedback, but some gaming and automotive products also include kinesthetic feedback. Vibrotactile feedback is the type of feedback found on every mobile phone and game controller. Kinesthetic feedback can push on users or provide some type of resistance to motion; rotary knobs found in some automotive human-machine interfaces (HMIs) are kinesthetic devices. It is important for product designers to think about the informational and experiential goals of tactile feedback when deciding what type of mechanical system to implement. Hardware is by far the most expensive component and the most difficult to change late in the product development process.

Software

The software layer is the part of the haptic system that is responsible for ensuring multimodal consistency, decoding effects, and timing and scheduling effect playback. It is the part of the haptic stack that manages the haptic experience in real time. The software layer must create command signals that the driver IC converts into electrical signals to control the actuator. Both Apple and Google provide a haptic software layer in their respective mobile operating systems. In particular, Google’s Android OS provides a standard hardware abstraction that allows third-party driver ICs to integrate deeply into the OS. In console gaming, the platform provider (e.g., Sony/Microsoft/Nintendo) provides a haptic SDK and API since these are vertically integrated systems.
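As a rough illustration of what "creating command signals" can mean, the sketch below synthesizes a short decaying sine burst (a simple "click") and quantizes it for a driver. This is a minimal sketch under stated assumptions, not Immersion's or any OS vendor's implementation: the function names, the 170 Hz frequency, the 8 kHz sample rate, the decay constant, and the signed 8-bit output format are all hypothetical values chosen for illustration.

```python
import math

def synthesize_click(freq_hz=170.0, duration_ms=30.0,
                     decay_per_ms=0.15, sample_rate_hz=8000):
    """Return normalized samples in [-1.0, 1.0] for a decaying sine burst.

    All default values are hypothetical; a real effect would be tuned to
    the actuator and driver chosen for the product.
    """
    sample_count = int(sample_rate_hz * duration_ms / 1000.0)
    samples = []
    for i in range(sample_count):
        t_s = i / sample_rate_hz
        # Exponential envelope makes the burst die out quickly.
        envelope = math.exp(-decay_per_ms * t_s * 1000.0)
        samples.append(envelope * math.sin(2.0 * math.pi * freq_hz * t_s))
    return samples

def to_signed_8bit(samples):
    """Quantize normalized samples to integers in [-127, 127].

    The 8-bit format is an assumption; real driver ICs define their own
    command formats.
    """
    return [round(s * 127) for s in samples]

click = synthesize_click()
codes = to_signed_8bit(click)
print(len(codes), "samples ready to stream to a (hypothetical) driver")
```

In a real product the effect description, the sample format, and the transport to the driver IC are dictated by the platform and hardware, which is one reason the layers of the stack cannot be designed in isolation.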

To produce high-quality haptic experiences, it is critical that the software layer has performance-oriented functionality. For example, if a game plays haptics and sound effects simultaneously, users will only associate the two stimuli with each other if they occur within about 50ms of each other. For this reason, haptic rendering should happen as low in the technology stack as possible (ideally at the firmware and kernel level) to minimize latency and avoid user confusion during multimodal experiences. Immersion consults with many of our chip and device partners to help facilitate the correct choices at this level of the stack.
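The timing argument can be made concrete with a small latency-budget check. This is only a sketch: the 50 ms window is the approximate figure quoted above, while the function names and every per-stage latency below are made-up numbers for illustration, not measurements of any real system.

```python
SYNC_WINDOW_MS = 50.0  # approximate association window quoted in the text

def haptic_onset_ms(trigger_ms, stage_latencies_ms):
    """Time at which the actuator starts moving, given per-stage delays."""
    return trigger_ms + sum(stage_latencies_ms)

def is_perceived_together(audio_onset_ms, haptic_onset, window_ms=SYNC_WINDOW_MS):
    """True if the two onsets fall within the association window."""
    return abs(audio_onset_ms - haptic_onset) <= window_ms

# Hypothetical scenario: the sound starts 10 ms after the game event.
audio_onset = 10.0

# Hypothetical path rendered high in the stack (app, framework, driver).
app_level = haptic_onset_ms(0.0, [30.0, 25.0, 15.0])
# Hypothetical path rendered in firmware (trigger decode, playback start).
firmware_level = haptic_onset_ms(0.0, [2.0, 3.0])

for name, onset in [("app-level", app_level), ("firmware-level", firmware_level)]:
    verdict = "in sync" if is_perceived_together(audio_onset, onset) else "out of sync"
    print(f"{name}: haptic onset at {onset} ms, {verdict}")
```

With these invented numbers the app-level path misses the window and the firmware path does not; the point is that every stage between the trigger and the actuator spends part of a small, fixed budget.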

Design

At the top of the haptic stack is the design layer. This can include content creation tools as well as the design framework for the haptic effects. Haptic effects need to have clear meaning and purpose for the HMI to be considered successful. Haptics must be thought of along with audio and visual feedback in the context of the overall experience. Do you want your virtual button to feel like a pager from 1988, or like a tap or click? Sadly, there are not many design tools on the market, and this shortage, combined with a lack of software APIs and standards, can make haptic design challenging. At Immersion, we continue to invest in working with the industry to bring new design solutions to the market that will harmonize the ecosystem.

System Synergies: Putting It All Together

The most important component of the haptic stack isn’t a component; it is the integration of these three layers into a single, cleanly functioning unit. At Immersion, we’ve worked in many different markets and have seen the challenges and pitfalls that can appear at the integration phase. Because a successful haptic experience relies on the entire stack, it is essential that the pieces be put together and optimized by a skilled practitioner to ensure that the entire haptic stack functions as expected, in harmony with the other experience elements. Much of the character and quality of haptic experiences is not precisely captured in the spec sheets and data sheets of component vendors, so it is essential that the overall experience is considered at all stages of product development and production. Due to a lack of performance standards, product managers and buyers need to be very thoughtful about swapping out stack elements after a haptic experience has been developed.

Example

At CES 2020, Immersion and TDK jointly demonstrated a 15-inch haptic touchscreen for automotive. The build for this system follows the haptic stack model. Here’s the quick overview.

Hardware: The large-screen demonstrator uses a piezo (PZT) actuator, an extremely high-fidelity and responsive actuator type, sized for the moving mass in this system (about 1.2kg). Immersion developed a custom driver IC solution because there are currently not many off-the-shelf PZT drivers that meet our performance needs (we’re hoping that will change in 2020!).
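To give a feel for why the moving mass drives actuator sizing, here is a back-of-the-envelope estimate using Newton's second law. Only the 1.2 kg mass comes from the description above; the 2 g peak acceleration and 200 Hz drive frequency are hypothetical targets, and the rigid-body model ignores suspension stiffness and damping, so this is an order-of-magnitude sketch rather than a description of how the demonstrator was actually sized.

```python
import math

STANDARD_GRAVITY = 9.80665  # m/s^2

def peak_force_newtons(moving_mass_kg, peak_accel_g):
    """Force needed to accelerate a rigid mass: F = m * a."""
    return moving_mass_kg * peak_accel_g * STANDARD_GRAVITY

def peak_displacement_um(peak_accel_g, freq_hz):
    """Peak displacement of sinusoidal motion: x = a / (2*pi*f)^2, in micrometers."""
    omega = 2.0 * math.pi * freq_hz
    return peak_accel_g * STANDARD_GRAVITY / omega ** 2 * 1e6

mass_kg = 1.2        # from the demonstrator description
target_accel_g = 2.0 # hypothetical design target
drive_freq_hz = 200.0  # hypothetical drive frequency

force = peak_force_newtons(mass_kg, target_accel_g)
travel = peak_displacement_um(target_accel_g, drive_freq_hz)
print(f"peak force: {force:.1f} N, peak displacement: {travel:.1f} um")
```

Under these assumptions the actuator has to deliver roughly 23.5 N over a travel of only a few micrometers, a regime of high force and small displacement.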

Software: This system runs a version of Android OS but also has STM32-based Immersion firmware to manage haptic effect synthesis and playback. The firmware also incorporates Immersion’s Active Sensing Technology (AST) that enables extremely sharp transient haptic sensations. For systems that are rendering high-fidelity haptic transients (common for button replacement use cases found in automotive), the firmware is the only way to provide the right level of timing and control.

Design: The system was designed and developed using Unity and some custom effect tools that Immersion uses internally. Immersion has a proprietary haptic encoding system that allows designers to quickly create, encode, and play back haptic effects on a running demonstration. This setup allowed our team to rapidly iterate on the interactions, visuals, and haptics to get to a great end-user experience.

Integration: It is important to note that the overall performance goal of this demonstrator was determined early in the development process and continuously revisited and reviewed against each technical development. At system integration, there was still a need to iterate the stack elements a few times to optimize the experience. This is a normal and expected part of developing a high-quality haptic product.

In summary, this example is a high-level overview of the haptic technology stack at work. Immersion uses a much finer-grained model internally, but these stack components are the essentials and hopefully provide a starting point for product architects and planners. In future posts, we’ll dive into each of these layers in more detail and talk about some of the key vendors, their performance considerations, and some areas that are ripe for innovation.
